In the first installment of this blog series I shared a virus-centered autobiography. Part of the inspiration for telling this story was the realization that, in addition to the usual encounters with viruses through immunizations, childhood diseases, and adult viral infections, my experience as a researcher at Purdue University and at the University of Oregon was filled with virus encounters. Since we are all inundated with news about the current CoViD-19 pandemic, this may be a timely reflection.

I was born in 1958. My very first encounter with the virus world was via the recommended vaccine schedule at the time. This included vaccinations against diphtheria, tetanus, and pertussis (DTP), smallpox, and polio. Since diphtheria, tetanus, and pertussis are bacterial infections and our focus is on viruses, I will not discuss these diseases. Nonetheless, how our immune system works, the main topic of this post, is more or less the same for bacterial and viral infections.

Smallpox had a 30% mortality rate, and the rate was even higher among infants. The symptoms were fever and vomiting, followed by mouth and skin sores that led to significant scarring and sometimes blindness. Smallpox was spread by person-to-person contact and via contaminated objects. Polio has a 2-5% mortality rate among infected children and a 15-30% mortality rate among infected adults. Although many infected people experience no symptoms, weakness and paralysis of the legs are common. Muscle weakness and paralysis can recur years later in post-polio syndrome. My father-in-law contracted polio as a toddler; he experienced its effects for the rest of his life. Globally, the number of polio cases today is a few hundred, compared to millions before the vaccine was developed. Preventing and nearly eliminating such deadly and contagious diseases has been invaluable to public health.

Most of us have a sense of how immunity to infectious diseases works. We get exposed to the virus and our body’s immune system creates a resistance to future exposure to the virus. After being exposed once, we are immune in the future. Exposure comes by experiencing the disease and recovering from it—this is how I developed my current immunity to measles, mumps, rubella, and chicken pox. Alternatively, you can be exposed to the virus with a vaccine, an injection or an oral dose that contains a fragment of the virus or an inactivated or less virulent form of the virus. Your immune system responds as if it had experienced the disease and develops immunity so that you are resistant to future exposure to the real thing.

The modern era of vaccinations began in the 1700s when doctors and scientists noticed that dairy farmers who were exposed to cowpox, a milder disease that is similar to smallpox, did not develop smallpox. Edward Jenner is often named as the person behind these early efforts, although there is some debate as to whether he was actually the first. These doctors began to deliberately expose people to the pus from cowpox blisters. Those exposed would contract cowpox, but would also gain an immunity to the deadlier smallpox. Modern versions of the smallpox vaccine continued this basic idea. Live vaccinia virus, a close relative of cowpox (not smallpox itself), was used to elicit immunity to the smallpox virus. Because smallpox has been eradicated among humans, this vaccine is no longer routinely administered.

There are two forms of polio vaccine in use. For people my age the most common form was the oral polio vaccine (OPV), which is a weakened version of the virus in which many mutations were introduced in the course of growing the virus. These mutations led to an attenuated form of the virus that conveyed immunity but did not cause the disease (although three in a million OPV doses result in an active polio infection). The vaccine commonly used today is the inactivated polio vaccine (IPV), which is given by injection. Live virus is inactivated with formaldehyde (formalin).

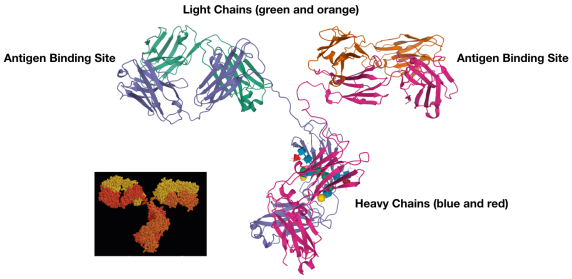

Figure 1. The three-dimensional structure of a mouse antibody (1IGT) from the Research Collaboratory for Structural Bioinformatics (RCSB) Protein Data Bank (PDB). The protein consists of four chains: two heavy chains (each with about 400 amino acids) and two light chains (each with about 200 amino acids). The representation shown here is known as a ribbon diagram, in which the polypeptide chain is traced and elements of structure are highlighted (for example, beta strands are shown by the flat arrows). The antigen binding site is made from the variable regions of a heavy and a light chain to give a unique binding site that recognizes a specific antigen. The inset shows the space-filling representation of the same molecule in a similar orientation. Many people are familiar with the V-shaped H2O molecule that has 3 atoms. The antibody has about 20,000 atoms.

The immune response in humans comes from two cell types: B cells and T cells. B cells are named after the bursa of Fabricius, the organ in birds where they were first discovered. Humans don’t have a bursa; in humans B cells are made in the bone marrow (which conveniently also starts with the letter B). T cells come from the thymus, an immune and endocrine system organ located between the heart and the sternum. B cells make antibodies. Antibodies are protein molecules that recognize and bind to foreign substances (known as antigens) that are parts of viruses or other pathogens (see Figure 1). This recognition is very specific and is based on the three-dimensional structures of the antigen and the antibody. The way a key fits into a lock or a hand into a glove is a good image. Molecules are more flexible and dynamic than a mechanical lock and key, which results in a very specific and tight interaction between the antigen and the antibody. This is sometimes referred to as an induced fit. The binding of the antibody prevents some aspect of the virus’s normal action. For example, antibodies can bind to a viral protein responsible for attaching to the host cell; a virus with such an antibody bound would not be able to infect a cell. Alternatively, antibody binding to a virus tags it for destruction by other immune system cells that engulf and digest foreign substances.

The way our body creates these antibodies to foreign molecules is remarkable. We do not have enough genes to make antibodies against every possible foreign molecule found on the surface of a pathogen (a parasite, bacterium, or virus, or a toxin produced by a pathogen). But in our genome there are sections of DNA that combine in different ways to make the gene that codes for the antibody in a given mature B cell. Slight errors in the recombination process introduce additional mutations that result in even more permutations. Once matured, each B cell produces only one antibody, which recognizes a section of a foreign protein or carbohydrate. In this way we make millions of different B cells, each producing a unique antibody, which together can target millions of different foreign substances. The initial production of B cells is totally random, but the chances that one or several of them will recognize a given foreign substance are high. Initially, the antibodies are embedded in the B cell membrane with the antigen binding region sticking out from the surface of the B cell. A B cell with a successfully binding antibody is signaled to proliferate in a process known as clonal selection. These B cells start producing antibodies that lack the membrane-binding region, like the structure shown in Figure 1; these antibodies are secreted and can bind to the foreign substance. This response, which is specific to the infectious pathogen, takes several days to kick in. Before then, if we have never been exposed to that particular pathogen, we are at the mercy of its effects and our body’s more general response to pathogens. Some of the symptoms that we associate with sickness (inflammation, fever, swelling, increased blood flow, pain) are actually the workings of the innate immune system, the part of the immune system that does not rely on antibody recognition.
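To get a feel for how a modest number of DNA segments can yield millions of antibodies, here is a minimal Python sketch of the counting argument. The segment counts are approximate textbook estimates for humans and are assumptions for illustration only; they are not taken from this post, and the function names are hypothetical. The sketch counts only the ways of combining segments and ignores the extra variety added by the slight recombination errors described above.

```python
from math import prod

# Approximate counts of functional human gene segments, taken from
# textbook estimates. These numbers are assumptions for illustration;
# they do not come from this post and vary between individuals.
HEAVY_SEGMENTS = {"V": 40, "D": 23, "J": 6}
KAPPA_SEGMENTS = {"V": 40, "J": 5}
LAMBDA_SEGMENTS = {"V": 30, "J": 4}


def chain_combinations(segments):
    """Number of ways to pick one segment of each type for one chain."""
    return prod(segments.values())


def antibody_combinations():
    """Heavy-chain choices times light-chain choices (kappa or lambda)."""
    heavy = chain_combinations(HEAVY_SEGMENTS)
    light = chain_combinations(KAPPA_SEGMENTS) + chain_combinations(LAMBDA_SEGMENTS)
    return heavy * light


if __name__ == "__main__":
    print(f"heavy chains: {chain_combinations(HEAVY_SEGMENTS):,}")
    print(f"antibodies:   {antibody_combinations():,}")
```

With these assumed counts, fewer than 150 gene segments give roughly 1.8 million heavy and light chain pairings, before any of the additional mutations are taken into account.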

In addition to these antibody-producing cells (known as the humoral response), some B cells become memory cells. These cells produce antibodies very quickly if exposure to the pathogen occurs a second time. This memory and rapid response to a previously seen antigen is the main cellular basis for immunity.

T cells recognize presented antigens and signal the cell-mediated immune response. The cell-mediated immune response produces cytotoxic T cells, which recognize and kill infected cells. T cells also stimulate the B cell response. Some cells of the immune system, or even infected cells, absorb pathogens and digest them into small fragments, e.g. peptides 10-15 amino acids long or fragments of surface carbohydrates. These small fragments bind to a membrane-embedded protein called the major histocompatibility complex (MHC) protein. The MHC presents the antigen at the outer surface of the cell. The MHC plus the presented antigen is what is recognized by T cell antigen recognition molecules, which are similar to antibodies. T cell specificity and variability are generated in much the same way as in B cells. Some T cells remain as memory T cells that produce a more rapid immune response if the antigen is encountered again.

Since the production of antibodies and other antigen recognizing proteins involves the random combination of pieces of the antibody genes, it is possible that there will be B cells and T cells generated that recognize molecules that are not foreign antigens but are self. Remarkably, the immune system has mechanisms by which these cells self-destruct or are inactivated.

The B cell and T cell response is called the adaptive immune response (in contrast to the innate immune response) because the response is specific to a given antigen and happens only when that antigen is encountered. Our immune system adapts to the pathogen-filled environment that we experience; that is, it changes in response to the environment. Specific B cell and T cell responses to a given antigen occur when exposure to a live or active pathogen occurs, i.e. when the patient actually catches the disease. Alternatively, the specific B cell and T cell responses also occur when the patient is vaccinated, that is, deliberately exposed to an inactive form of the pathogen or to a non-infectious fragment of the pathogen. In the first case the person experiences the disease and its consequences but then is immune to further infections. In the second case the person gains immunity without ever having experienced the disease. The second case is surely better, especially when there are significant risks associated with experiencing the disease itself. A significant part of vaccine testing is making sure that the risk associated with the vaccine (side effects, the effect of manufacturing and formulation components, a mild infection from an attenuated form of the pathogen, etc.) is significantly less than the risk of the disease. The cure can’t be worse than the disease.

Some of the memory cells last for the entire lifespan of the vaccinated individual. Others do not and a booster shot is necessary to keep the immune system active against the previously exposed antigen. In other cases the virus evolves in such a way that the original antibodies no longer recognize it. Really sneaky pathogens have mechanisms that vary their coat proteins systematically to evade the immune system. The early stages of vaccine development in the case of CoViD-19 have been straightforward, but on an amazingly fast track. Scientists isolated fragments of the coat protein of the virus particle and injected people with it in hopes of producing the immune response described above. Part of the early phase testing is to see if there are any side effects, but part of the testing is to see if the antibodies produced by the initial antigen exposure are actually effective in stopping the disease.

The serum antibody testing that has now been developed to see who has already had the disease detects antibodies in our blood that are specific to the CoViD-19 virus particle. Since there is no vaccine, the only way to have those antibodies is to have had the disease. In principle, testing for the anti-CoViD-19 antibody gives a clear result: people with the antibody have had the disease. In practice it’s not so clear. A small percentage (~5%) of false positives, that is, positive test results in people who have not had the disease, obscures these results. We also hear about treating a CoViD-19 infected patient with plasma (blood from which the red blood cells and other cells have been removed) from a previously infected person. If these antibodies are injected into an infected patient, they could inactivate the virus in the same way that they would if the patient were making their own (which they eventually will). This is known as passive immunity.
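Why would a false positive rate of only about 5% obscure the results? The short Python sketch below works through the arithmetic using Bayes' rule. The 5% false positive rate is the figure quoted above; the test sensitivity (95%) and the prevalence values are hypothetical numbers chosen only to illustrate the effect, and the function name is my own.

```python
def positive_predictive_value(prevalence, sensitivity, false_positive_rate):
    """Probability that a person who tests positive was really infected."""
    true_positives = sensitivity * prevalence
    false_positives = false_positive_rate * (1.0 - prevalence)
    return true_positives / (true_positives + false_positives)


# The 5% false positive rate is the figure quoted in the text. The
# sensitivity and the prevalence values are hypothetical, chosen only
# for illustration.
FALSE_POSITIVE_RATE = 0.05
SENSITIVITY = 0.95

if __name__ == "__main__":
    for prevalence in (0.01, 0.05, 0.20):
        ppv = positive_predictive_value(prevalence, SENSITIVITY, FALSE_POSITIVE_RATE)
        print(f"prevalence {prevalence:.0%}: {ppv:.0%} of positives are real")
```

Under these assumptions, if only 5% of the population has actually been infected, about half of all positive results come from people who never had the disease. The rarer the infection, the more the false positives dominate.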

